Porter Novelli’s learning and development culture emphasizes adaptability, curiosity, and bringing diverse perspectives to the table. That lens shaped how I experienced a recent course on generative AI and large language models. What stood out most wasn’t the sophistication of the models, but how interdisciplinary the group was and how essential it is for communicators to experiment with the technologies reshaping our industry so that we can be more effective advisors to our clients.

Influence is shifting upstream

Most conversations about AI in pharma focus on speed, scale, and efficiency. But the more profound shift is quieter. AI is not only changing how work gets done. It is changing how meaning is created.

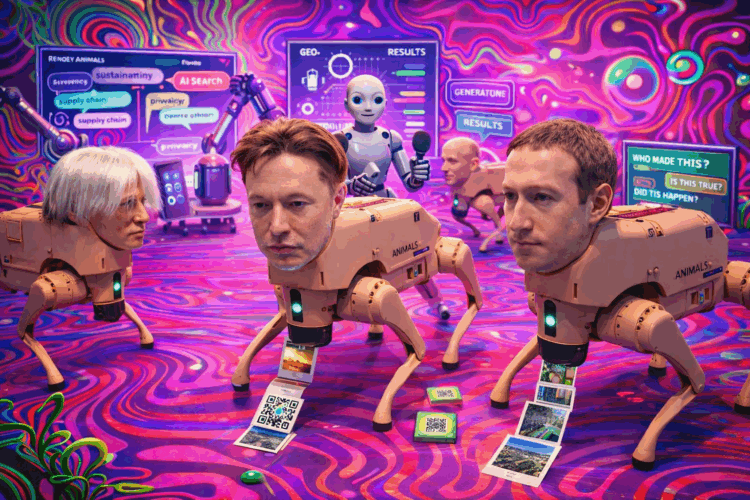

It’s no longer only about crafting the perfect message for a press release, a social post, or a media briefing. It’s also about shaping the inputs that increasingly generate, summarize, and interpret information across the ecosystem. In pharma, this shift is uniquely high stakes. Research from the Icahn School of Medicine at Mount Sinai shows that health misinformation directly affects patient behavior, treatment adherence, and public trust. Studies suggest that 1 in 3 individuals are using ChatGPT to manage their health and wellness, making it critical to address the risk of misinformation in AI-generated results. A misleading explanation is not just a reputational issue. It can become a clinical one.

The recent rollout of ChatGPT Health further underscores this shift. Generative AI is no longer adjacent to healthcare decision-making. It is increasingly positioned as an interface through which people seek, interpret, and act on health information.

That makes pharma a special case. Not because communicators should avoid AI, but because they must approach it with more structure, governance, and human oversight than most industries.

At Porter Novelli, we are already helping clients operationalize this shift through our Precision Narraitive approach. Rather than focusing only on AI outputs, Precision Narraitive helps brands understand and intentionally shape the language, sources, and framing that large language models increasingly draw from to generate and interpret health information. By identifying where meaning is being formed and adjusting those inputs with human oversight, we help ensure accuracy, veracity, and alignment in an AI-mediated ecosystem.

Communications belongs at the center of this shift, not at its edges

In consumer categories, inaccurate output is inconvenient. In healthcare, it can be dangerous.

Pharma organizations operate in an environment where:

- Accuracy is not optional.

- Content is subjective.

- Trust is not elective; it’s existential.

- And misinformation is not just reputational risk, but regulatory and ethical risk.

In the course, one theme came up repeatedly: generative AI is extremely capable and extremely literal. It reflects the quality, clarity, and bias of what it is given. It will confidently generate something that sounds right even when it isn’t. AI results, unlike Google results, don’t translate into a clickthrough where users can interrogate the content. Removing that clickthrough removes a key friction point, which leads to a less anchored evaluation of health topics. If communications isn’t part of how AI systems are designed, governed, and deployed, organizations risk losing control over their narrative.

What pharma companies must do now

To become leaders in influencing AI results through generative engine optimization, pharma organizations must move deliberately from experimentation to institutional readiness.

In pharma, this means differentiating approaches depending on where a product is in its lifecycle: messaging guidance for an approved product carries different regulatory and ethical considerations than content around a pipeline asset still in development.

From outputs to inputs

The new question isn’t just “What should we say?” but “What guidance are we embedding into the systems that will speak on our behalf?”

From speed to stewardship

The value isn’t how fast you can generate content; it’s how reliably you can ensure it is accurate, responsible, and aligned with your standards.

From content to credibility

In a world of infinite content generation, trust becomes a scarce resource. The organizations that win won’t be the loudest; they’ll be the most reliable. PR exists to inform and influence understanding, perspectives, and favorability, which makes it a natural discipline to lead generative engine optimization.

For a pharma brand to be the most reliable, it must identify the sources that carry authority and credibility across generative engines and curate content so that preferred messaging informs how those systems synthesize information for any user.

The bottom line

We are in a new AI era not because machines became smarter, but because they became expressive. They now operate at the level of language, interpretation, and explanation – the same level at which trust is built or lost. This shift raises questions about the reliability of sources that have historically served as authorities. As the influence of those historic standard-bearers of trust diminishes and AI’s influence over individuals’ health journeys grows, communicators have new urgency to learn about generative AI, how it works, and how their strategies can shape its output for good.

That is why this moment demands more than technical readiness. It demands narrative readiness and a commitment to accuracy.

In a world where machines increasingly help tell the story of care, the pharma organizations that lead in generative engine optimization will be the ones that decide, intentionally and ethically, what that story is.