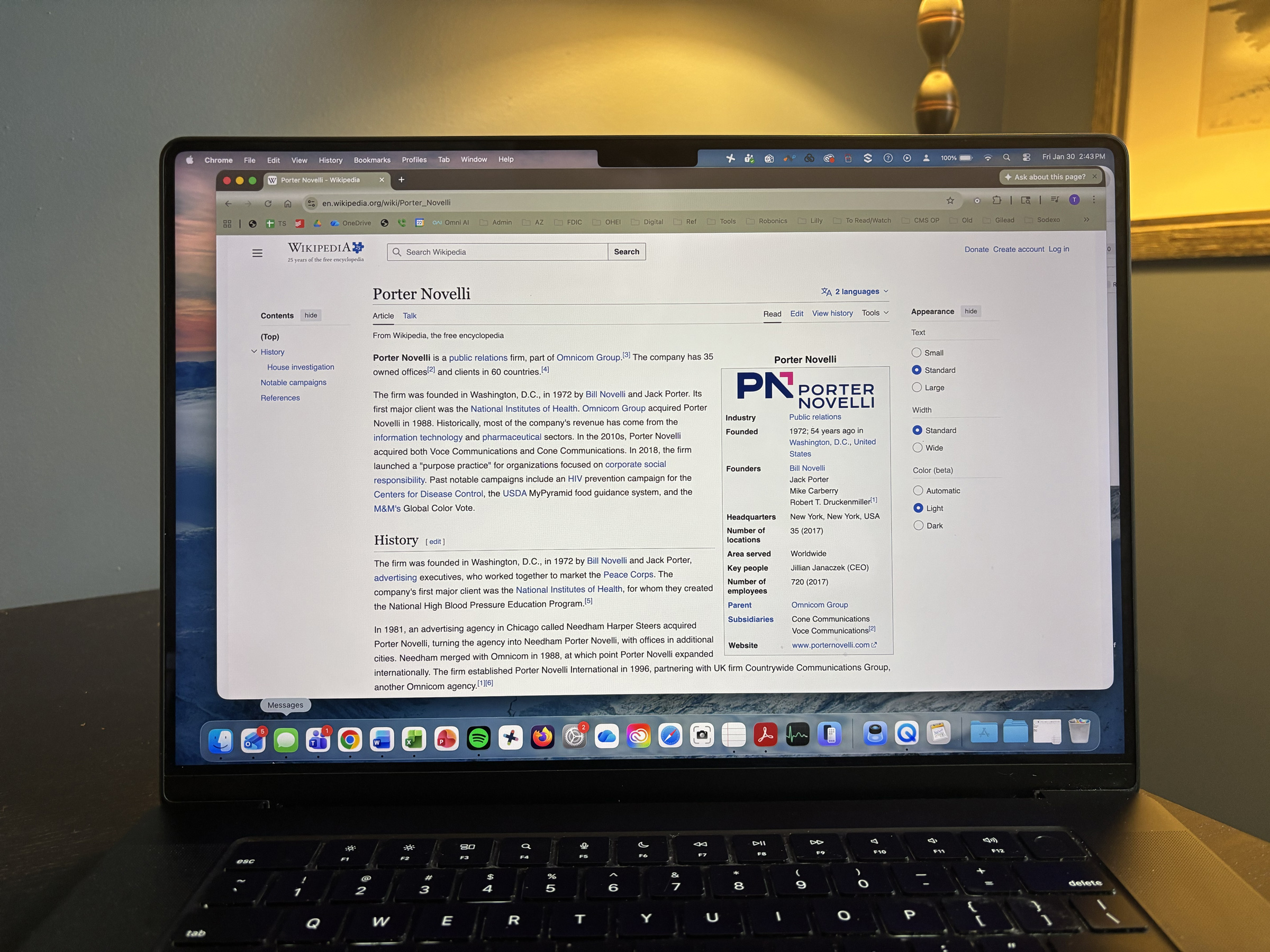

Wikipedia’s New Deal with LLMs Signals Its Deepening Role in AI-Driven Brand Perception

By: Mary Gaulke, Account Manager at Porter Novelli

A new deal between the Wikimedia Foundation and major AI firms is increasing Wikipedia’s influence on AI search summaries and LLM (large language model) responses. This creates a critical opportunity for organizations to ensure that Wikipedia tells their story accurately, grounded in quality sources and relevant details that AI systems will share with curious users in a variety of contexts.

Since the inception of general-knowledge LLMs, Wikipedia has been a uniquely trusted signal in their training because it is human-written, consensus-driven, and heavily moderated for accuracy and quality control. Now, the Wikimedia Foundation (which administers Wikipedia and its related projects) has signed enterprise licensing deals with major AI firms (including Microsoft, Meta, Amazon, Perplexity, and Mistral), ensuring that Wikimedia Enterprise APIs feed content from Wikipedia, Wikidata, and related projects directly into AI systems’ semantic layers. More than ever, Wikipedia content is shaping how models connect concepts, decide what matters, and choose citations.

WHAT’S CHANGING

- From one source to core infrastructure: Wikipedia is no longer just one scraped web source; it is being integrated as a primary knowledge spine for LLMs.

- Brand perception via AI: What’s in (or missing from) related Wikipedia articles increasingly dictates how AI answers questions about your company, products, and issues.

OPPORTUNITIES FOR YOUR BRAND

- Shape AI-era reputation: Thoughtful improvements aligned with Wikipedia’s policies ensure accurate, balanced, and complete coverage that LLMs will repeatedly learn from and reference.

- Clarify complex topics: For technical, regulated, or emerging areas, Wikipedia is a powerful way to define key concepts and connections that LLMs will rely on.

- Proof in market: After Porter Novelli expanded the article for a pharmaceutical client’s investigational therapy from a two-line stub into a full, sourced entry, LLM responses about the drug shifted dramatically. They now cite the page extensively and surface the accurate, contextualized details that our updates prioritized.

RISKS TO WATCH OUT FOR

- Outdated or incomplete coverage: Gaps or inaccuracies on Wikipedia now echo directly into AI responses, scaling misinformation or stale narratives.

- Ceded narrative control: If critics, competitors, or outdated sources define the consensus version of your brand on Wikipedia, that’s the version LLMs will propagate.

- Potential backfire from improper engagement: Edits that contravene Wikipedia guidelines or its terms of service (including undisclosed paid edits) can spark backlash, resulting in harsher scrutiny and more prominent negative content. Over more than 10 years, Porter Novelli has built deep expertise and a positive reputation on Wikipedia, helping brands avoid missteps by approaching edits with a focus on consensus-building and reputable sourcing.

WHAT SHOULD YOU DO NEXT?

- Evaluate how you show up in AI responses: Porter Novelli has proprietary tools to review how leading LLMs describe your organization, leaders, products, and category, and flag misalignments or omissions.

- Audit your Wikipedia footprint: Review relevant and frequently cited articles for accuracy, completeness, sourcing, and alignment with current reality across brand, product, executive, and category pages.

- Engage via consensus-building: Careless engagement with Wikipedia can create avoidable problems, but Porter Novelli has found extensive success approaching the Wikipedia editor community with a sustained, policy-compliant strategy grounded in trusted, independent media coverage.